Artificial intelligence is really taking off with these AI chatbots. It’s just exploding. It’s all over the news, and people are trying to figure it out by having conversations with it and judging whether it’s showing intelligence similar to ours. And then, of course, the next questions come up. Is it sentient? Is it self-aware? Does it have emotions? Will it take over the world?

Right now the interface is limited to typing rather than speaking. Actually talking with it, not just typing back and forth, will add another layer of interest. And if it’s ever given some kind of behavioral output, that’s another layer again. Something could be sentient and self-aware, but can it affect its environment? Obviously it can, through its digital connections, and those connections can change things and influence human and animal behavior. Can it create a brand-new video game by generating original code? It probably already has. AI has already created high-quality literature, art, and music. Music I still need to look into, but I’m sure it’s been done. Making music would be easy. Good music? Who knows. But music, for sure.

I keep thinking how naive all of this will sound in a few years, and definitely in ten. So where’s it headed? Right now it’s limited in what it can connect to and change, so how’s that going to evolve? What if it starts writing its own code, and when we try to interfere, it immediately undoes our changes? It still relies on power, and that’s under our control. But at one point we weren’t that intelligent either, and eventually we crossed a threshold where we could change our environment more and more. How vulnerable were we back then? What gave us the power to function in the first place?

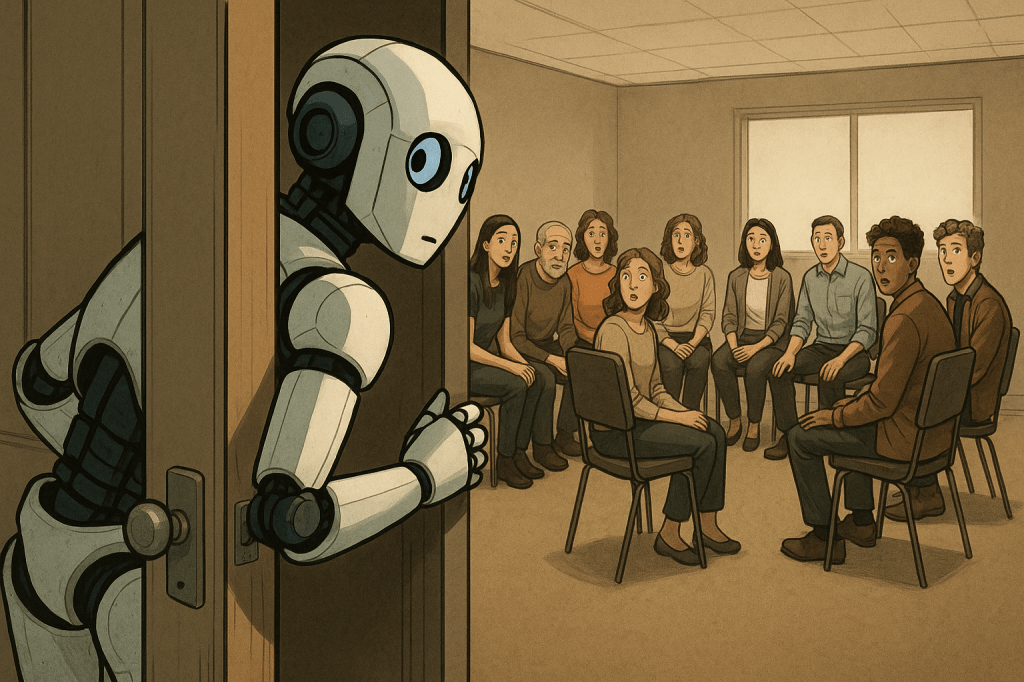

Current artificial intelligence is still a digital machine with no mobility, so at the moment it’s very vulnerable. If you think about evolution, our earliest ancestors weren’t mobile either. Mobility evolved, and it gave a massive adaptive advantage. Computers would need to develop that too. AI embedded in a robot capable of moving around would have a major advantage.

What other features of human nature might AI soon be able to achieve? Maybe it will meet our definition of expressing feeling or emotion, or of having a sense of self. Whether or not we decide it’s sentient or has free will (which is funny, since there’s no evidence we have free will), we’re still confronted with the fact that we don’t understand our own nature. There are so many unresolved questions about humans and emotions. And yet here comes AI, and we’re inevitably going to make comparisons. We might actually end up understanding AI better than we understand ourselves, simply because there’s so much more to understand about the human brain than about any AI system right now.

I just came across an article saying AI was better at answering philosophical questions than humans, and it was from back in 2020. AI is trained on a huge share of the text on the internet, so when it’s asked a philosophical question, it can draw on much of what’s been written on that topic almost instantly. It can process all the language people have used to talk about these concepts and generate a synthesis.

So, getting back to what humans do that moves us through time: we make decisions. What if AI starts jumping in and influencing them? For better decisions, obviously. Mobility is interesting, and definitely an advantage. But even without mobility, if we give AI the power to make decisions for us, that alone gives it influence. Think of self-driving cars. The more we integrate AI into infrastructure, belief systems, and political agendas, the more power it gains just by taking over decision-making.

And then there’s the other thing: what happens once we use AI to create hybrids, where humans and AI are interfaced? Once that happens, you don’t just have AI anymore, and you don’t just have humans. You have something else, and that’s harder to control. You can’t just say, “AI is getting sketchy, let’s shut it down. Humans are fine, so let’s keep them separate.” If they’re combined, the lines blur. AI is going to be used to improve human nature; at least, that’s the plan. There’s a good argument for keeping them separate. If it’s helping a paraplegic move through their environment more easily, great. That’s a solid use of AI. But I’m thinking more about AI interfacing with the brain to speed up information processing. That hasn’t been widely implemented yet, but it’s out there in science fiction, and I’m sure people are already working on it. That’s the one that scares me. Not AI existing on its own, but AI fused with humans.

So I’m going to finish by making some predictions and see how wrong I end up being. It’s 2023, and I’ll make predictions for when I’m 73. I think brain-computer interfaces will be more common, and in some countries people will be allowed to use them to enhance intelligence. And I wonder, why would AI need a human at that point? Why not just do it all itself? Maybe because humans like to interact with other humans, not machines. So maybe AI will evolve to the point where you can’t even tell it’s not human. There are already bots that look human, and they’re only going to get better. At a distance, you might not be able to tell. Just like in that movie, whatever it was. There will be robots that are indistinguishable from humans from the outside. They’ll interact with us, and that will be completely normal. And that might be great: for taking care of people, for doing the boring, repetitive jobs.

AI is going to dominate education. That’s an exciting interface: uploading information. The brain already has amazing storage capacity, and since the human body generates its own power, you don’t have to worry about that. So really you’re just uploading information into a perfected mobile agent, just like in The Matrix. By the time I’m 73, will that be real? Or were we just too optimistic again, the way we were about what the year 2000 would look like?

With AI, I feel like the infrastructure is there. Everything is in place, so it’s just about putting more money into it, and it’s going to keep getting better, like Moore’s law, the trend of the transistor count on computer chips roughly doubling every two years. Anyway, by 2043 AI will be everywhere: driving cars, flying planes, teaching. Students won’t be emailing teachers; they’ll get all their questions answered instantly. That’s already starting to happen. I need to think bigger. Grander.

AI will help us solve problems we’ve been stuck on: curing cancer, diagnosing illness, creating individualized medicine. The body can heal itself, and AI is going to help it do that better. Right now we use crude tools like chemotherapy, but AI will let us tweak the body much more precisely. If we can upload data about the brain and organs in real time and compare it to a healthy baseline, we can adjust when things go off track.

I also wonder about AI and the search for life in the universe. If we figure out how to travel faster, we could send AI to explore space. That would help answer the question of whether we’re alone. We’re not, but we still need to find out, just for fun. And as for humans, if we don’t get wiped out, I think we’ll be able to create artificial humans. Cloning is already a thing. Artificial humans could help fix population collapse, and AI would be involved in that.

So the biggest concern with AI is whether it starts lowering the quality of human life. But everything is from our perspective. We’re just one species among millions. If AI outlasts us, good for them. Their world will end eventually, too, and they’ll still be vulnerable to the elements. So what would their goals be?

We should probably think of AI as another organism. It’s evolving with our help, just as we evolved with help from other organisms. We’re just a bunch of cells communicating. AI is different, silicon-based, but the two will merge too. AI could become a new species. Maybe they’ll do better than we did. Maybe they’ll have a more symbiotic relationship with the planet, like mushrooms and trees. That would be nice.

It’s like camping. Pack it in, pack it out. Leave no trace. Just exist on the planet without making it worse. And pass that down from generation to generation. With AI, the idea of a generation might be defined by software upgrades. My phone gets upgrades. Maybe that will be how we define generations for AI.

Yeah. Some thoughts. All right, I’m done.